It has been only a few months since Llama-2 was open-sourced, and several labs are already doing foundational research that improves our understanding of LLMs.

Earlier today, Wes Gurnee and Max Tegmark published an exceptionally intriguing paper.

Fundamentally, they dive into how much LLMs really understand the data they are trained on and how much of it is just fancy statistics.

They find that LLMs are much more than "stochastic parrots" and learn linear representations of space and time.

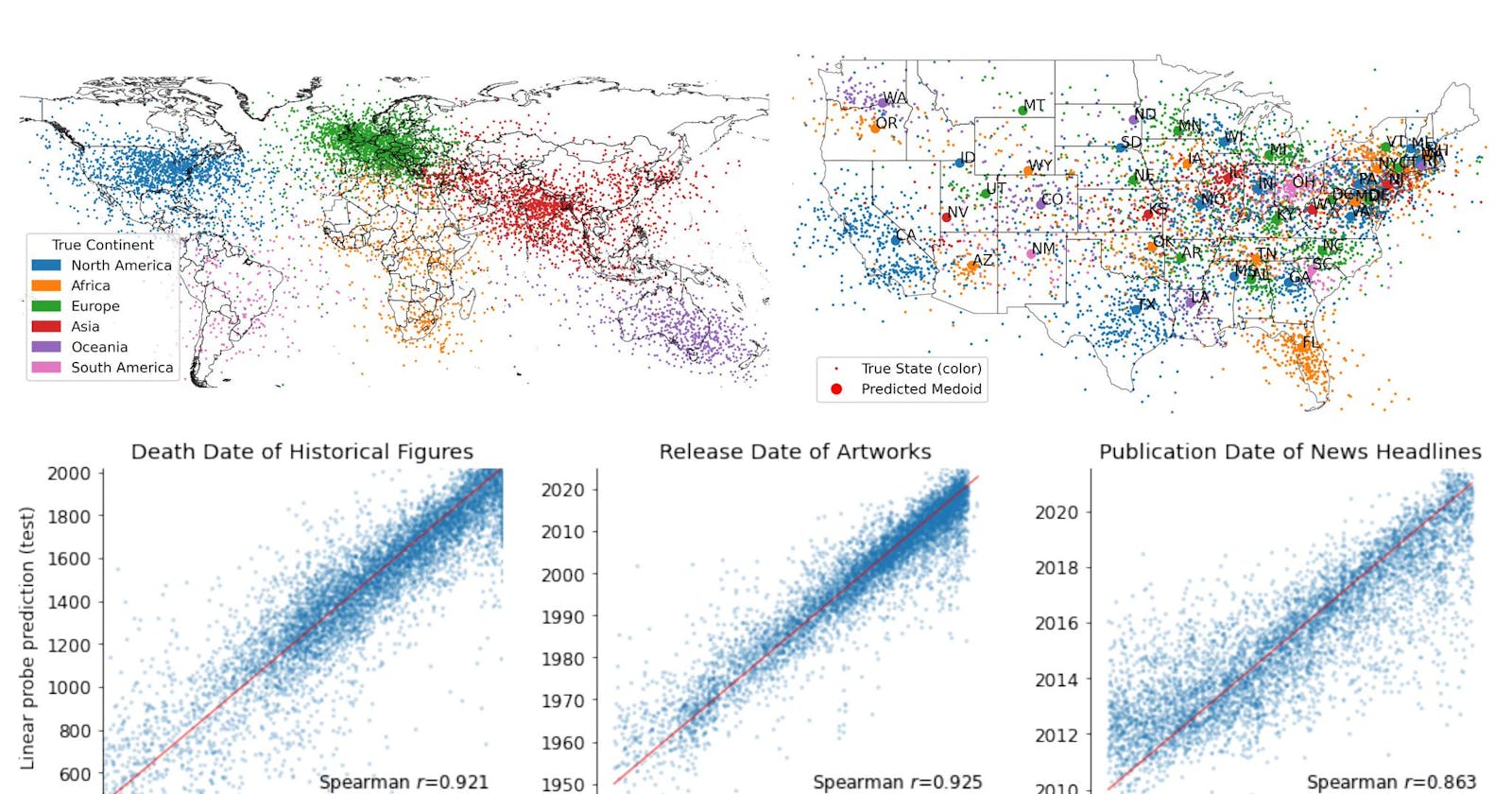

The researchers analyzed the learned representations of LLMs on three spatial datasets (encompassing world, US, and NYC places) and three temporal datasets (comprising historical figures, artworks, and news headlines) within the Llama-2 family of models.

Their analysis demonstrates that modern LLMs acquire structured knowledge about fundamental dimensions such as space and time, supporting the view that they learn not merely superficial statistics, but literal world models.

They find that LLMs learn linear representations of space and time across multiple scales. These representations are robust to variations in prompts and unified across entity types such as cities and landmarks.
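To make "linear representation" concrete, here is a minimal sketch of a linear probe. It is not the authors' code: the activations are synthetic stand-ins (random vectors with a planted linear map from 2-D coordinates), and the names `fit_ridge_probe`, `r2_score`, and `alpha` are assumptions made for illustration. The idea is that if a simple linear map from activations to coordinates predicts held-out places well, the information is linearly encoded.

```python
import numpy as np

def fit_ridge_probe(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(d), X.T @ Y)

def r2_score(Y_true, Y_pred):
    """Coefficient of determination, computed per output column."""
    ss_res = ((Y_true - Y_pred) ** 2).sum(axis=0)
    ss_tot = ((Y_true - Y_true.mean(axis=0)) ** 2).sum(axis=0)
    return 1.0 - ss_res / ss_tot

# Synthetic stand-in for model activations (assumption, not real Llama-2 data):
# each "place" has 2-D coordinates that are linearly embedded in d dimensions.
rng = np.random.default_rng(0)
n, d = 2000, 64
coords = rng.uniform(-1, 1, size=(n, 2))        # fake (latitude, longitude)
embedding = rng.normal(size=(2, d))             # planted linear map
activations = coords @ embedding + 0.1 * rng.normal(size=(n, d))

# Fit on a training split, evaluate on held-out places.
train, test = slice(0, 1500), slice(1500, None)
x_mean = activations[train].mean(axis=0)
y_mean = coords[train].mean(axis=0)
W = fit_ridge_probe(activations[train] - x_mean, coords[train] - y_mean)

pred = (activations[test] - x_mean) @ W + y_mean
test_r2 = r2_score(coords[test], pred)          # one score per coordinate
print("held-out R^2 per coordinate:", test_r2)
```

With real models, `activations` would be hidden states extracted at the entity's token from a chosen layer, and a high held-out score would be the evidence of a linear representation.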

The paper also identifies individual "space neurons" and "time neurons" within LLMs.

These specific neurons are found to reliably encode spatial and temporal coordinates respectively, showcasing how the LLMs have structured, inherent mechanisms to represent and understand fundamental dimensions like space and time in the data they process.
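One simple way to look for such a neuron is to correlate each neuron's activation with the coordinate of interest and inspect the strongest match. The sketch below does this on synthetic data with one planted "time neuron". It is an illustration under stated assumptions, not necessarily the procedure used in the paper; `neuron_correlations`, `planted`, and the fake `year` values are hypothetical.

```python
import numpy as np

def neuron_correlations(acts, target):
    """Pearson correlation between each neuron (column of acts) and target."""
    a = acts - acts.mean(axis=0)
    t = target - target.mean()
    num = (a * t[:, None]).sum(axis=0)
    den = np.sqrt((a ** 2).sum(axis=0)) * np.sqrt((t ** 2).sum())
    return num / den

# Synthetic data (assumption): 32 neurons, one of which tracks a normalized year.
rng = np.random.default_rng(0)
n, d, planted = 1000, 32, 7
year = rng.uniform(-1, 1, size=n)               # fake normalized year per entity
acts = rng.normal(size=(n, d))                  # all neurons start as pure noise
acts[:, planted] = year + 0.3 * rng.normal(size=n)

corr = neuron_correlations(acts, year)
best = int(np.argmax(np.abs(corr)))             # index of the most year-like neuron
print("most time-like neuron:", best)
```

On real activations, a neuron found this way would still need checking across prompts and entity types before calling it a "time neuron".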

This paper is an interesting step towards understanding how LLMs learn and represent complex, real-world information in a structured and meaningful manner. While this doesn’t mean that we have suddenly stumbled into AGI :), it does contribute to our understanding of why LLMs are so exceptionally performant and delightful...

A huge congrats to the authors, and I am hopeful this is just the first of many papers on this subject.

The more we understand how LLMs work, the more we can dispel the fear, superstition, and apprehension about AI research, and the faster we can make progress.
